I was recently given a short-term assignment to develop a number of reports in Crystal Reports.

Upon arriving at the client's site, I quickly found out that the only tool I would be using to create the reports was essentially Crystal Reports itself.

My initial look at the database revealed that its structure was far from perfect and that it had some confusing table relationships. Another hurdle was that several columns in various tables held user-customizable values, with no hint as to where a value was used or how it related to other database objects.

Figuring out which SQL queries to write, and how, was definitely a challenge. To my dismay, matters were made even worse by my account being read-only, so creating database diagrams to visualize the data was not an option.

After briefly peering into the links Crystal Reports produces, I realized I was lacking a tool that could close the gap.

Just as an aside, every morning I read a few blogs. One I literally read over my first cup of coffee is Chris Alcock's excellent The Morning Brew, which not long ago mentioned Aaron Bertrand's post on free tools for SQL Server. "Bingo!" I thought. "I need to take them for a test drive!" Knowing nothing about either tool, I decided to download both.

After a short wait for the downloads, Toad for SQL Server 5.0 is the first to get installed. Surprisingly, the free license covers only a few people in an organization (clever!). Its clean interface impressed me, but the clutter of panes and panels quickly slowed my navigation. In my first five minutes with the software, which I think are the most critical ones, I spot something: Object Explorer:

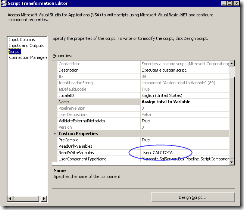

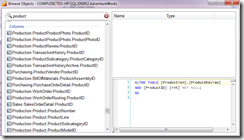

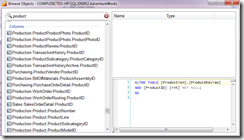

"This must be the one that can find an object by its name," I murmured to myself. Nice, but it turned out to work only as a filter on top-level objects: for example, if a database is highlighted, applying a filter shows only the objects matching the criterion. Not particularly useful, at least for me, since I needed to find where a specific column name is used. Hmm, let's give SQL Everywhere a try! OK, it installs in a snap! Within my first, ehh, seconds I find the Object Browser under View, search for my objects and, whoa, I get a large set of results to go through! Nice: my columns, tables, indexes, functions and whatnot are all listed, and, gosh, even the T-SQL definition of the selected object is shown.

Cool. In addition, a streamlined version is available through the Objects tab on the Explorer pane.

Double-clicking a column name takes you to that column in its table.

Good, now we are ready to write some useful SQL queries.

Still, I did not want to give up on Toad for SQL Server just yet, so I went on to write a complex join. I start typing SELE… and, hmm, I notice IntelliSense does not pop up with suggestions. All right, after all I know how to type; it seems it does not suggest keywords, or maybe that is just my impression. When I get to the join to another table, IntelliSense does show up and tries to guess my join, and to my amusement the top choice actually makes sense. Not bad! Now I try the same in SQL Everywhere and start typing SELE… wow, wait, what is going on? Three choices appear: scf, ssf and st100. I hit Tab and get the expanded statement. Nice! The other two snippets expand in the same way.

Not a bad productivity improvement for free!

OK, now I have churned out some ugly T-SQL code that I would be ashamed to paste into a Command in Crystal Reports, so let's beautify it first! I still love Toad, so, ahm, where is SQL Format or something like it? It is there but disabled, and as it turned out, it is disabled in SQL Everywhere too. Pity! No problem, I can do that without installing a single piece of software: I go to http://www.sqlinform.com/online.phtml and, whoa, my long SQL looks good!

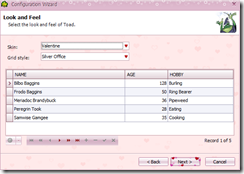

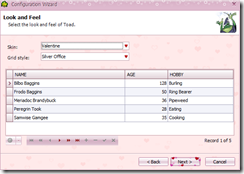

I thought I deserved a rest, so I started playing around with the settings. Surprisingly, both editors offer a lot of customization, and Toad even made me laugh by offering a pumpkin skin and a Valentine's skin.

What a jolly amphibian!


(I guess its creators thought a developer would change its appearance as a holiday approaches.)

SQL Everywhere takes a more modest approach to its appearance, which to me looks more tasteful and, more importantly, practical: its options are simply color schemes.

As far as productivity goes, both tools offer good code snippet support, though SQL Everywhere has a more functional set of snippets. One catch: the set is quite short.

Now let's highlight some interesting differences. The first that comes to mind is how the tools handle the database connection. SQL Everywhere connects on demand by default, which reduces the load on the server, and also offers "As early as possible" and "On First Use" options; Toad does not seem to offer any setting of this kind.

Another nifty feature is "Execute Here" (available when you click a database name). It is particularly useful if you want to run the same SQL statement against one database and then another. For this purpose the product seems to offer data set comparison features, but they were not available in the free editions. I guess it would be just as easy to dump the results to files and compare them using, say, ExamDiff.
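If you go the dump-and-diff route, a plain text diff will flag rows that merely come back in a different order from the two databases. Below is a minimal sketch, assuming both result sets were exported to CSV files with the same header row; `read_rows` and `diff_result_sets` are hypothetical helpers, not part of either tool. Rows are compared as multisets, so row order is ignored but duplicate rows still count.

```python
import csv
import sys
from collections import Counter
from typing import TextIO


def read_rows(f: TextIO) -> tuple[list[str], Counter]:
    """Read a CSV export; return its header and a multiset of data rows."""
    reader = csv.reader(f)
    header = next(reader, [])
    # Counter keeps duplicates: a row present twice in one export and
    # once in the other is still reported as a difference.
    rows = Counter(tuple(row) for row in reader)
    return header, rows


def diff_result_sets(left: TextIO, right: TextIO) -> tuple[list[tuple], list[tuple]]:
    """Return (rows only in left, rows only in right), ignoring row order."""
    left_header, left_rows = read_rows(left)
    right_header, right_rows = read_rows(right)
    if left_header != right_header:
        raise ValueError(f"column mismatch: {left_header} vs {right_header}")
    # Counter subtraction keeps only positive counts; elements() expands them.
    only_left = sorted((left_rows - right_rows).elements())
    only_right = sorted((right_rows - left_rows).elements())
    return only_left, only_right


# Hypothetical command-line use: python diff_results.py db1.csv db2.csv
if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1], newline="") as a, open(sys.argv[2], newline="") as b:
        only_a, only_b = diff_result_sets(a, b)
    for row in only_a:
        print("<", ",".join(row))
    for row in only_b:
        print(">", ",".join(row))
```

The `<` and `>` prefixes mimic classic diff output: rows returned only by the first database versus only by the second.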

For database administrators, viewing active processes in SQL Everywhere (by pressing F2) is so simple it blows the mind compared to how you get this in SSMS. This feature is sure to be loved!

In closing, I would like to highlight some features I found lacking or not to my satisfaction:

First, it bothers me a lot that neither IDE can output query results to text. However, this is easily compensated for by the "export to CSV" functionality.

Second, SQL Everywhere cannot edit data directly in the grid.

Third, while SQL Everywhere offers search within the results grid, this did not appear to work in Toad at all (I am curious whether it works in the paid version of the product).
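Regarding the first point, a CSV export can be turned back into something close to text output with a few lines of code. A minimal sketch, assuming the export has a header row; `csv_to_text` is a hypothetical helper, not a feature of either tool. It pads every column to its widest value and underlines the header with dashes, in the spirit of SSMS's "Results to Text" mode.

```python
import csv
from typing import TextIO


def csv_to_text(f: TextIO, sep: str = " ") -> str:
    """Render a CSV export as fixed-width text columns with a dashed
    rule under the header row."""
    rows = list(csv.reader(f))
    if not rows:
        return ""
    ncols = max(len(r) for r in rows)
    # Pad short rows so every row has the same number of cells.
    rows = [r + [""] * (ncols - len(r)) for r in rows]
    # Each column is as wide as its longest value (header included).
    widths = [max(len(r[i]) for r in rows) for i in range(ncols)]
    lines = [
        sep.join(cell.ljust(w) for cell, w in zip(row, widths)).rstrip()
        for row in rows
    ]
    rule = sep.join("-" * w for w in widths)
    return "\n".join([lines[0], rule, *lines[1:]])
```

For example, an export containing `id,name`, `1,alice` and `22,bob` renders as four lines: `id name`, `-- -----`, `1  alice` and `22 bob`.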

Speaking of prices, Toad's Pro version (the cheapest option) costs $595 USD vs. $199 for SQL Everywhere: a big leap for not much more, I am tempted to say. I am also curious whether this is the result of the so-called "brand recognition" paradox in marketing.

All in all, both tools are a very good complement to SSMS, and I hope this whets your appetite for exploring them further and contributes to your bottom line!

As it stands now, my first choice is SQL Everywhere, for a more flexible, practical and streamlined (e.g., macro support) database development experience.